How Deduplication in Clickhouse works? (Avoiding FINAL keyword for better performance)

Check out OLake, our latest open source offering for replicating databases to lakehouses.

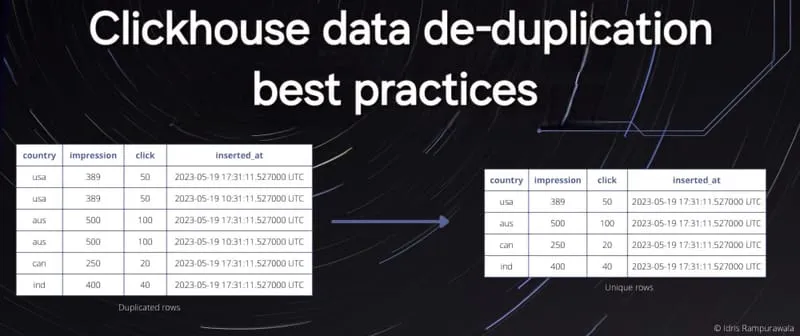

Clickhouse is optimised for its ingestion and reading speed to make your data near-real time latency, some trade-offs are made. De-duplication is something that Clickhouse doesn't guarantee in normal table.

There are several strategies in Clickhouse for achieving this. Generally you ingest all of the data first and then try to de-duplicate later.

Example Scenario :

We have a hits_rmt table which has around 110million rows and contains data of website hits which might get updated later creating duplicates.

Important columns:

-

updated_timewhich gets updated when record is updated. -

WatchIDis the unique id of each event.

We are simply trying to figure out which hits have a column value as FUniqID = '7411978401145070562'.

Now when we run query it returns 39 rows with time of 1.534 sec as run time. In this there are only 13 unique rows with unique primary key.

select * from hits_rmt where FUniqID = '7411978401145070562'

Now we want to de-duplicate to get latest updated rows only.

Following are the different ways of writing SQL queries to get the de-duplicated data in Clickhouse. Clickhouse is optimised for its ingestion and reading speed to make your data near-real time latency, some trade-offs are made one of them being de-duplication happening in a disjointed manner post the data is ingested.

Depending on your volume of underlying data, use case and available memory resourcesfor most optimum performance one of the following method of querying will come in handy

Option 1 - Using ReplacingMergeTree with Final

Usually in Datazip we create all tables as with table engine as ReplacingMergeTree when you ingest. In this table engine, Clickhouse automatically runs a background async de-duplication while merging data. The dedupe happens on order by columns that you choose while creating the table.

CREATE TABLE default.hits_rmt

(

`WatchID` Int64,

`JavaEnable` Int16,

`Title` String,

`GoodEvent` Int16,

`EventTime` DateTime,

`FUniqID` Int64,

...

...

...

`updated_time` DateTime

)

ENGINE = ReplacingMergeTree

ORDER BY WatchID

SETTINGS index_granularity = 8192

In Datazip ingestion console, you can configure it either manually or default is a unique column called eventn_ctx_event_id generated by Datazip.

The Dedupe operation is triggered at some point in time decided by Clickhouse automatically and not necessarily run before you query. So you might still encounter duplicates as seen above.

Now you can force Clickhouse to Dedup things on-the-fly at runtime of query by using a keyword called final.

select * from hits_rmt final where FUniqID = '7411978401145070562'

As you can see now we only get 13 rows in result but query time has increase almost 100x. This option is good for small tables, but we should preferably avoid it when querying big tables.

In Datazip when you ingest the data, you get normal physical ReplicatedMergeTree table as _table123_raw_ and a view called _table123_ which is a simple query like

select * from table123_raw final

Option 2 - SubQuery strategy

This strategy is a fast and effective but you need to figure out how to achieve this using simple logic :

Example :

SELECT *

FROM hits_rmt

WHERE (WatchID, updated_time) IN (

SELECT

WatchID,

max(updated_time)

FROM hits_rmt

WHERE FUniqID = '7411978401145070562'

GROUP BY 1

)

This works in almost similar time as you are querying without final

Option 3 - argMax function

Calculates the arg value for a maximum val value.

This is preferred if you are only selecting some columns. Read more about it here.

Example :

SELECT

WatchID,

argMax(Title, updated_time),

argMax(EventTime, updated_time)

FROM hits_rmt

WHERE FUniqID = '7411978401145070562'

GROUP BY WatchID

It only executes in 0.277 sec.

Option 4 - Limit n by Col1, Col2

A query with the LIMIT n BY expressions clause selects the first n rows for each distinct value of expressions. Read More

SELECT *

FROM hits_rmt where FUniqID = '7411978401145070562'

ORDER BY WatchID, updated_time DESC

LIMIT 1 BY WatchID

It only took 0.589 secs to execute it.

Using all of these strategies you can leverage power of lightning fast queries in clickhouse without compromising on de-duplication.

You can read more about how to further reduce memory usage in Clickhouse queries Solve memory limit errors & speed up queries in Clickhouse

About Datazip

Datazip is an AI-powered Data platform as a service, giving the entire data infrastructure from ELT (Ingestion and transformation framework), storage/warehouse to BI in a scalable and reliable manner, making data engineering and analytics teams 2-3x more productive.

Ready to revolutionize your data warehousing strategy? Contact us at [email protected] to learn more about how Datazip can benefit your organization.